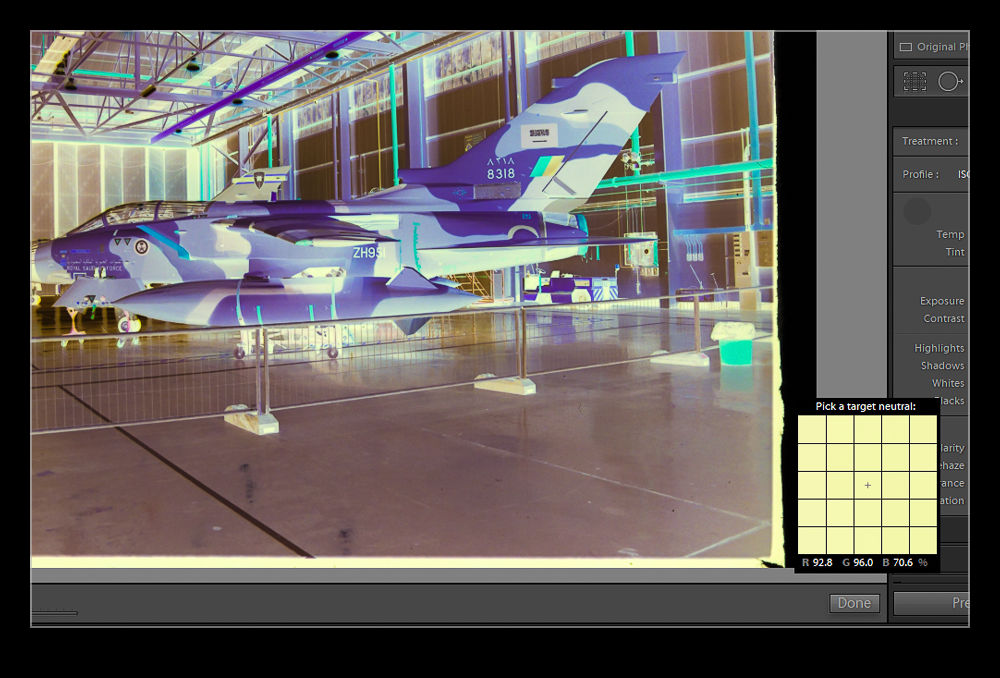

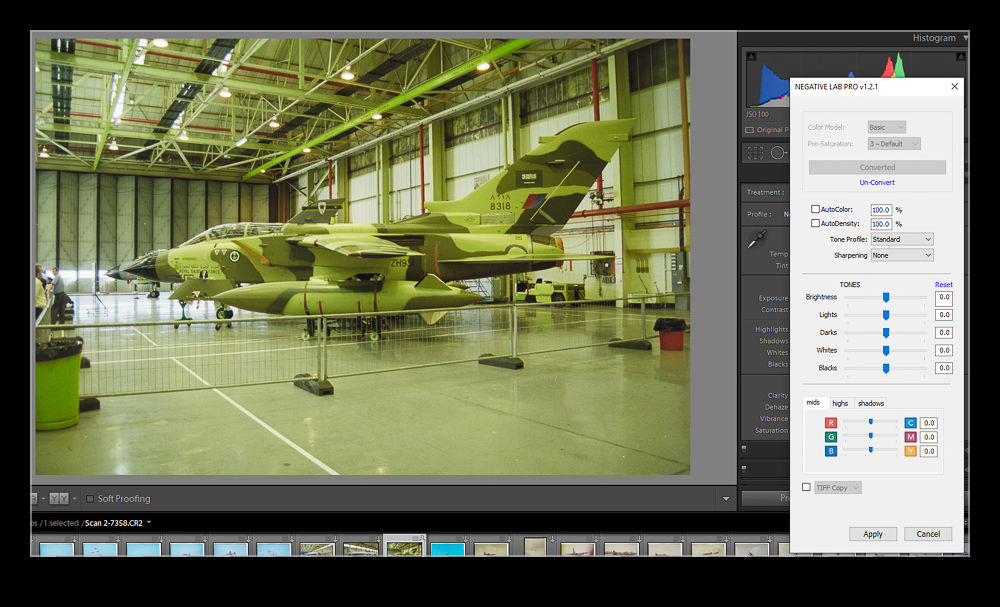

This is the finished image. This is pretty much what it looked like to the naked eye (through the viewfinder) when I took the shot given how dark the sky was.

A rare arrival was due on a day that was not good from a weather perspective. It was dull and rainy and so not what you would hope for. Conditions like this mean I try to exploit some of the features of the camera and the processing options available. First, how to set up the camera? With the light being bad and variable, I went to a pretty high ISO level. I shot in aperture priority mode and added a lot of exposure compensation.

In my experience, the metering is pretty good when shooting against the sky in clear weather but, when there is a lot of cloud, the camera tends to treat the clouds as too bright and underexposes the subject. In these conditions I dial in a lot of exposure compensation, and on this day I used +2.0. Aside from the exposure question mark, the reason I do this is that there is a lot more information available in the lighter end of the exposure curve. Shooting in RAW gives you options.
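To put rough numbers on the idea that the lighter end holds more information, here is a minimal sketch. It assumes a 14-bit RAW file that stores sensor values linearly; the bit depth is an assumption for illustration rather than a detail of the camera used here, and `levels_per_stop` is a hypothetical helper name.

```python
def levels_per_stop(bit_depth: int, stops: int) -> list[int]:
    """Count the distinct linear values in each stop, brightest stop first.

    Assumes linear encoding: each stop down halves the light, so it also
    halves the range of values left to describe that stop.
    """
    top = 2 ** bit_depth
    counts = []
    for _ in range(stops):
        half = top // 2
        counts.append(top - half)  # values between this stop's floor and ceiling
        top = half
    return counts


# Assumed 14-bit file, looking at the six brightest stops.
raw_levels = levels_per_stop(14, 6)
for i, n in enumerate(raw_levels, start=1):
    print(f"stop {i} below clipping: {n} levels")
```

Under these assumptions the brightest stop gets half of all the available values and each stop below it gets half as many again. A subject pushed two stops brighter with +2.0 compensation therefore has roughly four times as many values describing it, provided it stays below clipping.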

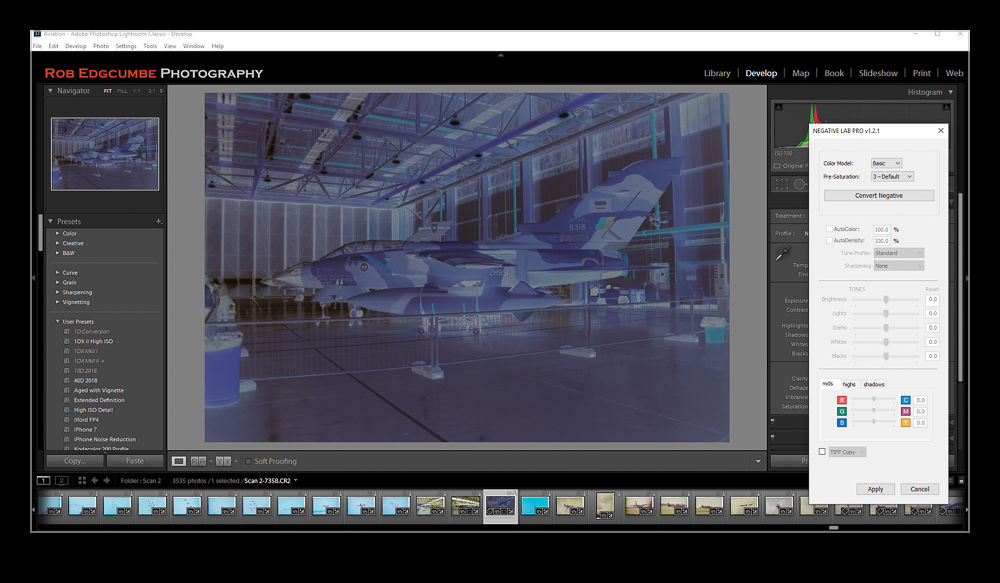

This is how the camera recorded the image. This is the in-camera JPEG that I extracted from the RAW file using Instant JPEG From Raw.

If you had looked at the aircraft at the time, you would have seen a dark and menacing sky but plenty of detail on the plane. The camera does not see it that way at the metered exposure: the aircraft would come out very dark. When processing, that dark area would give you something to work with, but the variation in data would be more limited. Shoot overexposed and you get more to work with.
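As a rough illustration of "more to work with", the sketch below records the same subtle tonal gradient twice: once at the metered exposure and once with +2.0 compensation, which is four times the light. The gradient values, the 14-bit linear encoding and the `quantize` helper are all assumptions made up for the example, not measurements from this shot.

```python
def quantize(signal: list[float], bit_depth: int = 14) -> list[int]:
    """Round linear values (0.0 = black, 1.0 = clipping) to integer sensor levels."""
    top = 2 ** bit_depth - 1
    return [min(top, round(s * top)) for s in signal]


# Hypothetical dark fuselage: a smooth gradient from 1% to 2% of clipping.
gradient = [0.01 + 0.01 * i / 999 for i in range(1000)]

metered = quantize(gradient)                   # recorded as the meter suggests
boosted = quantize([s * 4 for s in gradient])  # +2.0 EV means 4x the light

distinct_metered = len(set(metered))
distinct_boosted = len(set(boosted))

print("distinct levels at metered exposure:", distinct_metered)
print("distinct levels at +2.0 EV:", distinct_boosted)
```

The brighter capture spreads the same gradient across many more distinct values, so there is finer tonal detail left after the exposure is pulled back down in processing. The sketch ignores noise and the tone curve a RAW converter applies, so treat it as a simplification.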

This approach will only work well if you are shooting RAW. If you are using JPEG, too much of the usable data will be discarded during the processing in the camera. To show you what I mean, here are two images, both from the same shot. One is the RAW file as it showed up when imported into Lightroom. The other is the embedded JPEG that you can extract from the RAW file, which is what you see when the file is first imported, before the rendering is undertaken. As you can see, the JPEG is overexposed but the RAW rendering seems even more so.

There is way more data in the RAW file though. Immediately, as I bring the exposure slider back down, the clouds go from being white to quite dark – just as they appeared on the day. Meanwhile, the fuselage of the aircraft has a lot of the data intact and maintains a lot of the brightness that you could see at the time. Very little needs to be done with the blacks and they are almost in the right spot by the time the exposure is good for the clouds. The fuselage might be a bit too dark though. A small tweak of the blacks and a little boost in the shadows to compensate for too much darkening with the exposure slider and suddenly the shot is looking a lot more like it did when I saw it develop.

My RAW processing baseline always results in a slightly more overexposed shot than the embedded JPEG. When you first open the image, the embedded JPEG seen in the previous shot shows up initially and then Lightroom renders the RAW file. This was the initial RAW rendering prior to any adjustments.

One advantage of shooting on such a crummy day is that the sky is a giant softbox – in this case a very soft one! The result is that the light is a lot more even than on a sunny day. The darker look can actually make the colors look a bit more intense than if they were losing out to the whites when the sun is right on them. While there was only one plane I was specifically there for, playing around with these other shots and working on the technique was a nice extra benefit.

After four months without a haircut, I finally managed to get some clippers and set about cleaning up my head. I won’t share the awful look that I had developed (and the current look might not be that great either) but I did have Nancy take some pictures before and after. While she was taking the after shots, I asked her to take a couple that were directly head on. I was interested in facial symmetry.
